The previous post introduced DSE Graph and summarized some key considerations related to dealing with large graphs. This post aims to:

- describe the tooling available to load large data sets to DSE Graph

- point out tips, tricks, and key learnings for bulk loading with DSE Graph Loader

- provide code samples to simplify your bulk loading process into DSE Graph

Tooling

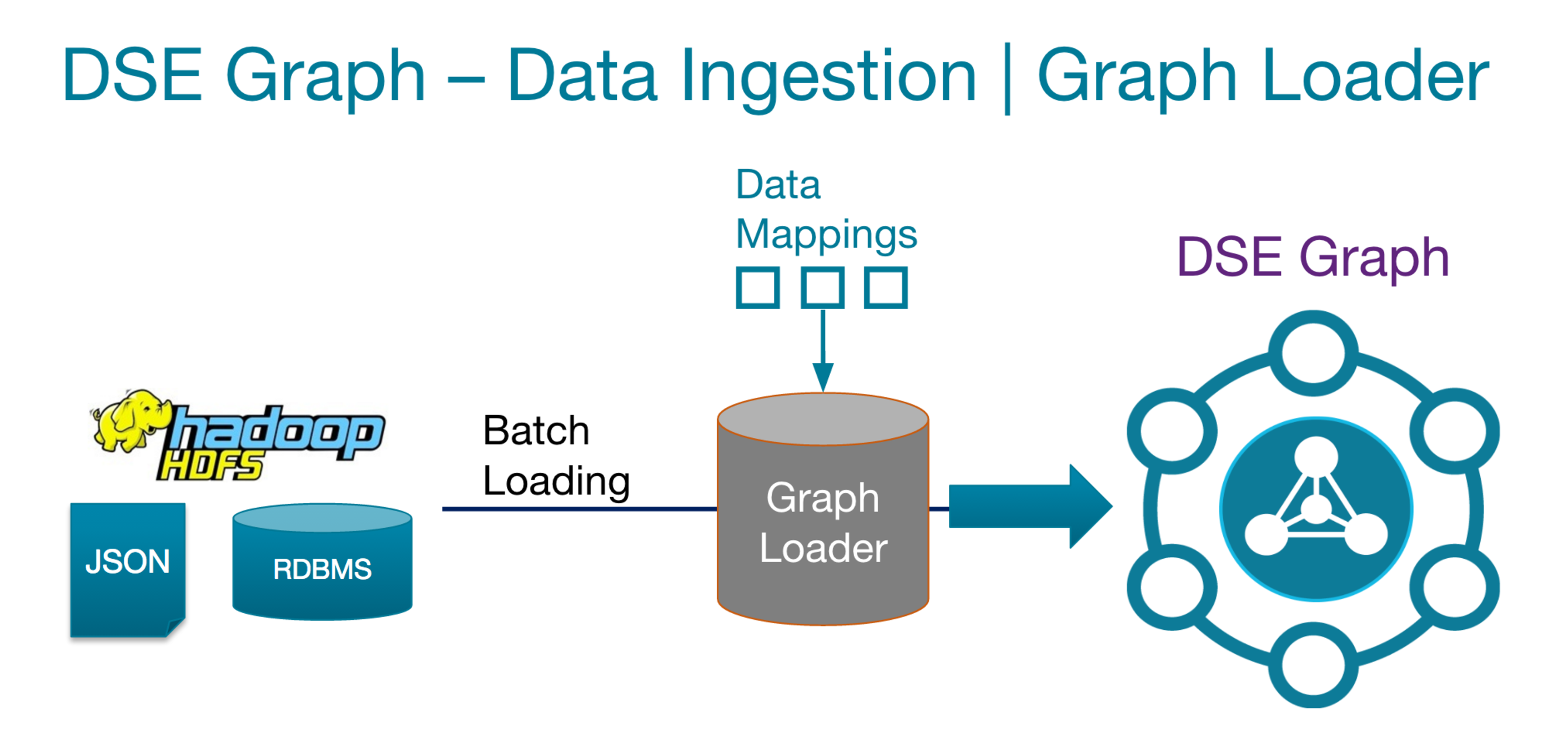

DSE Graph Loader (DGL) is a powerful tool for loading graph data into DSE Graph. As shown in the marchitecture diagram below, the DGL supports multiple data input sources for bulk loading and provides high flexibility for manipulating data on ingest by requiring custom groovy data mapping scripts to map source data to graph objects. See the DataStax docs which cover the DGL, it's API, and DGL mapping scripts in detail.

This article breaks down the tactics for efficient data loading with DGL into the following areas:

- file processing best practices

- mapping script best practices

- DGL configuration

Code and Tactics

Code to accompany this section can be found at:

https://github.com/phact/rundgl

Shout out to Daniel Kuppitz, Pierre Laporte, Caroline George, Ulisses Beresi, Bryn Cooke among others who helped create and refine this framework. Any bugs / mistakes are mine.

The code repository consists of:

- a wrapper bash script that is used for bookkeeping and calling DGL

- a mapping script whith some helpful utilities and * a generic structure that can be used as a starting point for your own custom mapping scripts

- a set of analysis scripts that can be used to monitor your load

The rest of this article will be focused on DGL loading best practices linking to specific code in the repo for clarity.

File Bucketing

The main consideration to take when loading large data volumes is that DGL performance will suffer if fed too much data in a single run. At the time of this article (2/28/2017), the DGL has features designed for non-idempotent graphs (including deduplication of vertices via an internal vertex cache) that limit its performance with large idempotent graphs.

Splitting your loading files into chunks of about ~120Million or fewer vertices will ensure that the DGL does not lock up when the vertex cache is saturated.

UPDATE: In modern versions of the graph loader, custom vertices are no longer cached. This means there should not be a performance impact of large files for this kind of load. Chunking may still be useful for percentage tracking / restarting / etc.

The rundgl script found in the repo is designed to traverse a directory and bucket files, feeding them to DGL a bucket at a time to maximize performance.

Track your progress

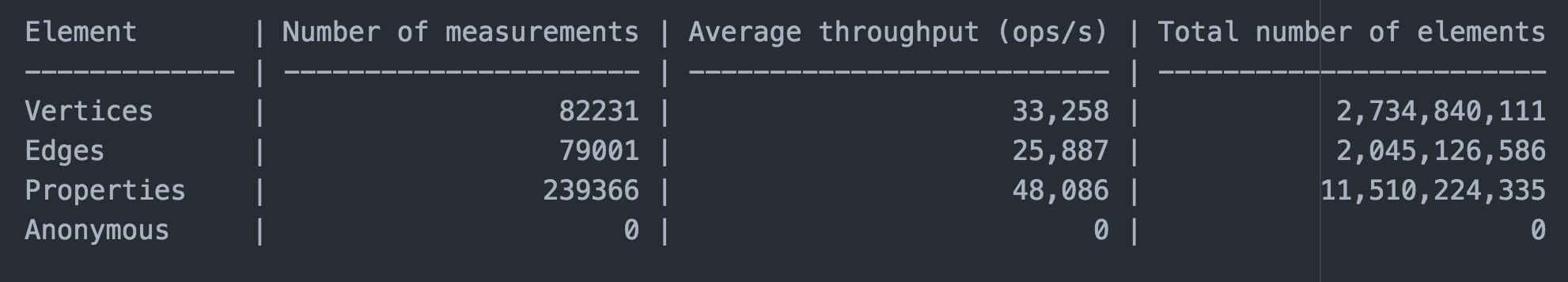

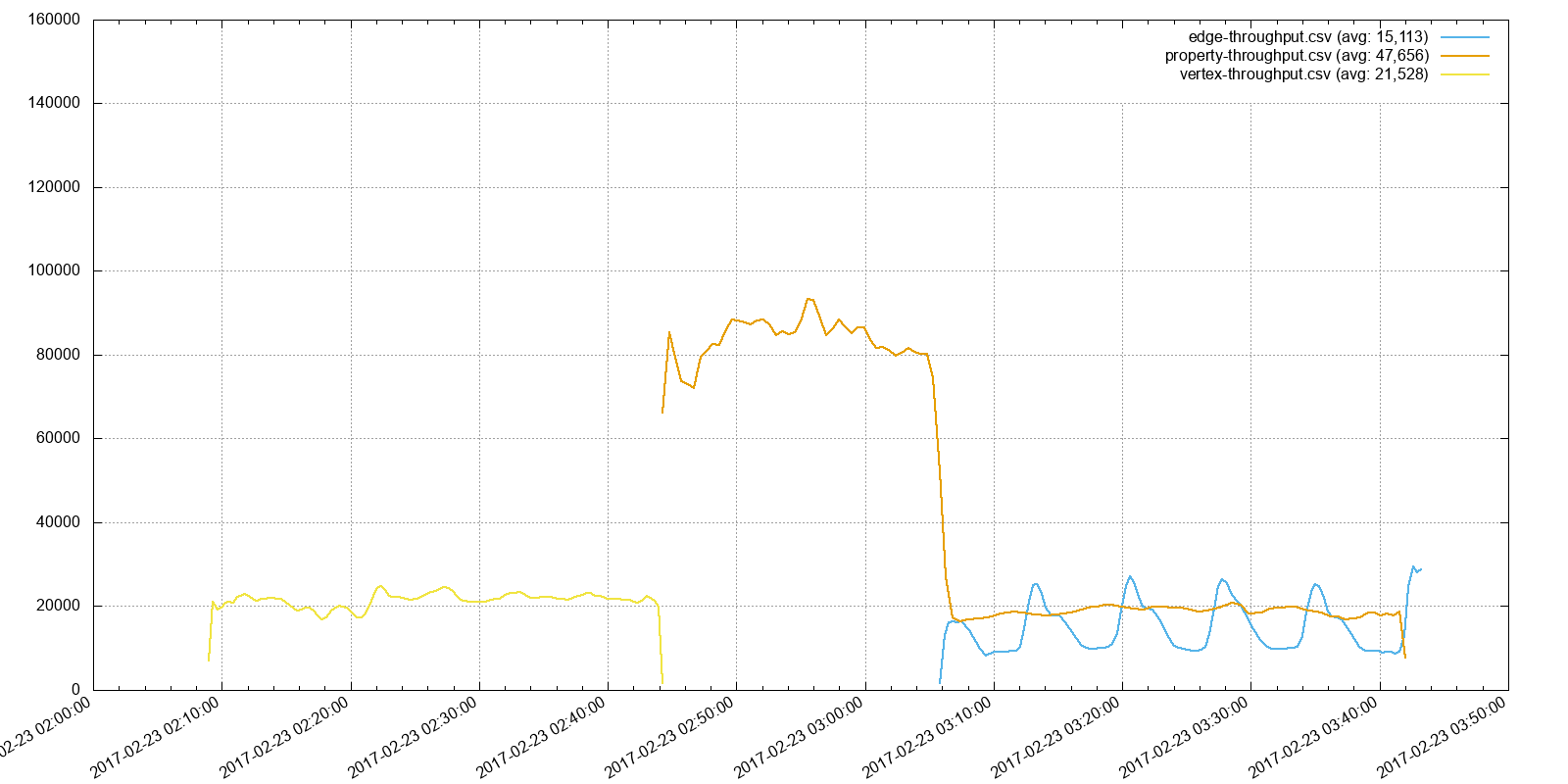

The analysis directory in the rundgl repo contains a script called chart. This script will aggregate statistics from the DGL loader.log and generate throughput statistics and charts for the different loading phases that have occurred (Vertices, Edges, and Properties).

Note - these scripts have only been tested with DGL < 5.0.6

Navigate to the analysis directory and run ./charts to get a dump of the throughput for your job:

It will also start a simple http server in the directory for easy access to the png charts it generates, here is an example chart output:

Thank you Pierre for building and sharing the analysis scripts

Monitor DGL errors

When the DGL gets timeouts from the server it does not log them to STDOUT, they can only be seen in logger.log. In a busy system, it is normal to see timeouts. These timeouts will be handled by retry policies which are baked into the loader. Too many timeouts may be a sign that you are overwhelming your cluster and need to either slow down (reduce threads in the loader) or scale out (add nodes in DSE). You will know if timeouts are affecting your cluster if your overall throughput starts trending down or if you are seeing backed up threadpools or dropped mutations in OpsCenter.

Aside from Timeouts, you may also see errors in the DGL log that are caused by bad data or bugs in your mapping script. If enough of these errors happen the job will stop. To avoid having to restart from the beginning on data related issues, take a look at the bookeeping section below.

Don't use S3

If you are looking to load significant amounts of data, do not use S3 as a source for performance reasons. It will take less time to parallel rsync your data from s3 to a local SSD and then load it than to load directly from s3.

DGL does have S3 hooks and from a functionality perspective it works quite well (including aws authentication) so if you are not in a hurry, the repo also includes an example for pulling data from S3. Just be aware of the performance overhead.

Groovy mapper best practices

Custom groovy mapping scripts can be error prone and the DGL's error messages sometimes leave a bit to be desired. The framework provided aims to simplify the loading process by providing a standard framework for all your mapping scripts and minimizing the amount of logic that goes into the mapping scripts.

Use the logger

DGL mapping scripts can use the log4j logger to log messages. This can be very useful when troubleshooting issues in a load, especially issues that only show up at runtime with a particular file.

It also allows you to track when particular loading events occur during execution.

INFO, WARN, and ERROR messages will be logged to logger.log and will include a timestamp.

DGL takes arguments

If you need to pass an argument to the groovy mapper just pass it with - <argname> in the command line.

The variable argname will be available in your mapping script.

For example, ./rundgl passes -inputfilename to DGL here leveraging this feature. You can see the mapping script use it here.

The argument inputfilename is a list of files to process to the DGL. This helps us avoid having to do complex directory traversals in the mapping script itself.

By traversing files in the wrapper script, we are also able to do some bookeeping.

Bookeeping

loadedfiles.txt tracks the start and end of your job as well as the list of files that were loaded and when each particular load completed. This also enables us to "resume" progress by modifying the STARTBUCKET.

STARTBUCKET represents the first bucket that will be processed by ./rundgl, if you stopped your job and want to continue where you left off, count the number of files in loadedfiles.txt and divide by the BUCKETSIZE, this will give you the bucket you were on. Starting from that bucket will ensure you don't miss any files. Since we are working with idempotent graphs, we don't have to worry about duplicates etc.

DGL Vertex Cache Temp Directory

Especially when using EBS, make sure to move the default directory of the DGL Vertex Cache temp directory.

IO contention against the mapdb files used by DGL for its internal vertex cache will overwhelm amazon instances' root EBS partitions

Djava.io.tmpdir=/data/tmp

Leave search for last

If you are working on a graph schema that uses DSE Search indexes, you can optimize for overall load + indexing time by loading the data without the search indexes first and then creating the indexes once the data is in the system.

Some screenshots

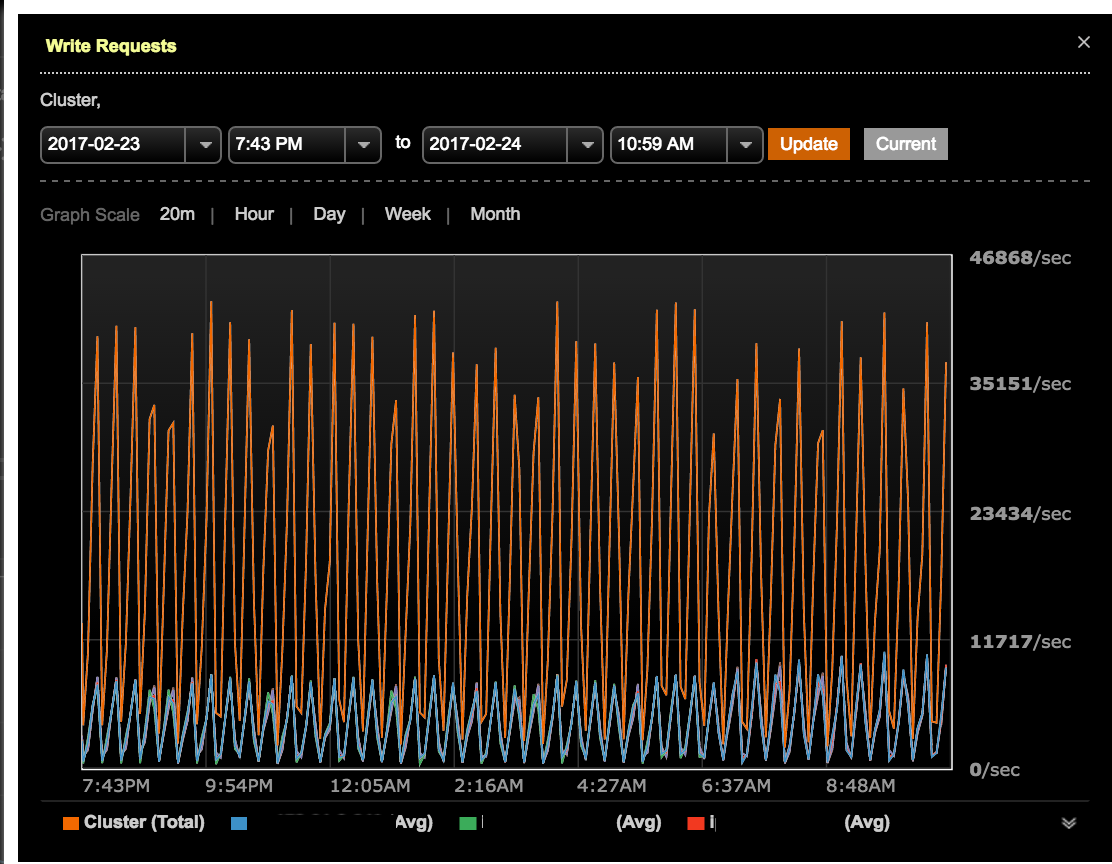

Here are some screenshots from a system using this method to load billons of vertices. The spikes are each run of the DGL kicked off in sequence by the rundgl mapping script. You can see the load and performance are steady throughout the load giving us dependable throughput.

Like with other DSE workloads, if you need more speed, scale out!

Throughput:

OS Load:

Conclusion

With the tooling, code, and tactics in this article you should be ready to load billions of V's, E's, and P's into DSE graph. The ./rundgl repo is there to help with error handling, logging, bookeeping, and file bucketing so that your loading experience is smooth. Enjoy!