Last November, the week before my daughter was born, OpenAI released the Assistants API. I tried building an app with it and was impressed by the simplicity and power of the abstraction, so I decided to start the sleep deprivation early and built v0 of `astra-assistants`.

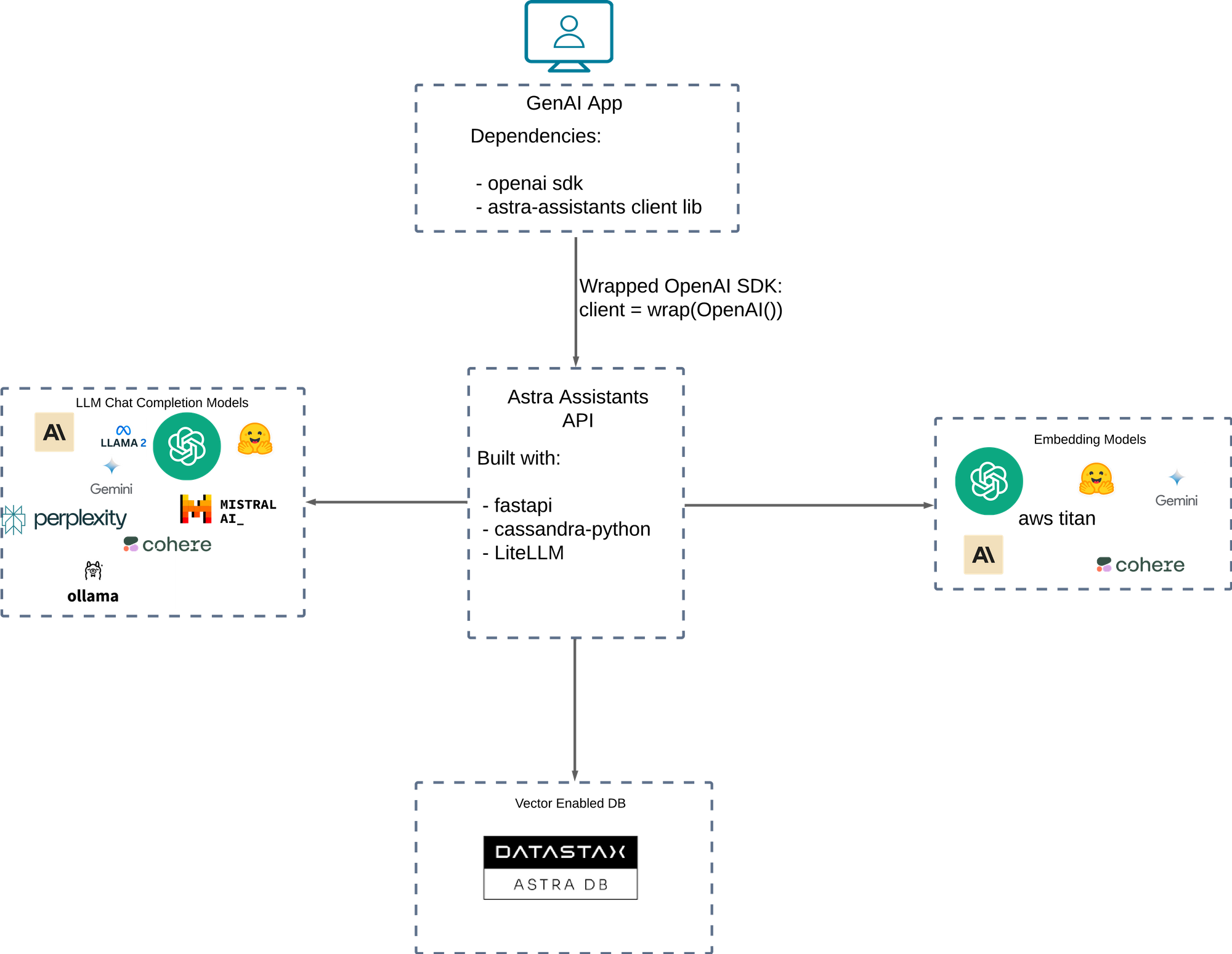

Astra Assistants is a drop-in replacement for OpenAI's Assistants API that supports third-party LLMs and embedding models and uses AstraDB / Apache Cassandra for persistence and approximate nearest neighbor (ANN) search. You can use our managed service on Astra or, since it's open source, host it yourself.
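ANN search finds the stored vectors most similar to a query vector without comparing the query against every one of them. As a point of reference, here is a minimal exact (brute-force) cosine-similarity search in Python. It computes the result that an ANN index approximates; it is not how AstraDB or jvector actually search, and the toy vectors and chunk IDs are made up for illustration.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: list[float], docs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Exact nearest neighbors: score every stored vector and keep the k most similar.

    An ANN index returns (approximately) this same result without
    scoring every stored vector.
    """
    ranked = sorted(docs, key=lambda doc_id: cosine(query, docs[doc_id]), reverse=True)
    return ranked[:k]


# Toy 3-dimensional "embeddings"; real embedding models produce far more dimensions.
chunks = {
    "chunk-a": [1.0, 0.0, 0.0],
    "chunk-b": [0.9, 0.1, 0.0],
    "chunk-c": [0.0, 0.0, 1.0],
}
print(top_k([1.0, 0.05, 0.0], chunks, k=2))  # ['chunk-a', 'chunk-b']
```

Brute force costs one similarity computation per stored vector per query, which is exactly the cost an ANN index is built to avoid as the number of file chunks grows.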

Note: If you like the project, please give us a GitHub star!

As you can see below, you can simply patch your OpenAI client with the astra-assistants client library and pick your model. This points your app at our managed astra-assistants service instead of at OpenAI.

Astra Assistants automatically routes LLM and embedding calls to your model provider of choice using LiteLLM, and it persists your threads, messages, assistants, vector_stores, files, etc. to AstraDB. File search leverages AstraDB's vector functionality, which is powered by jvector.

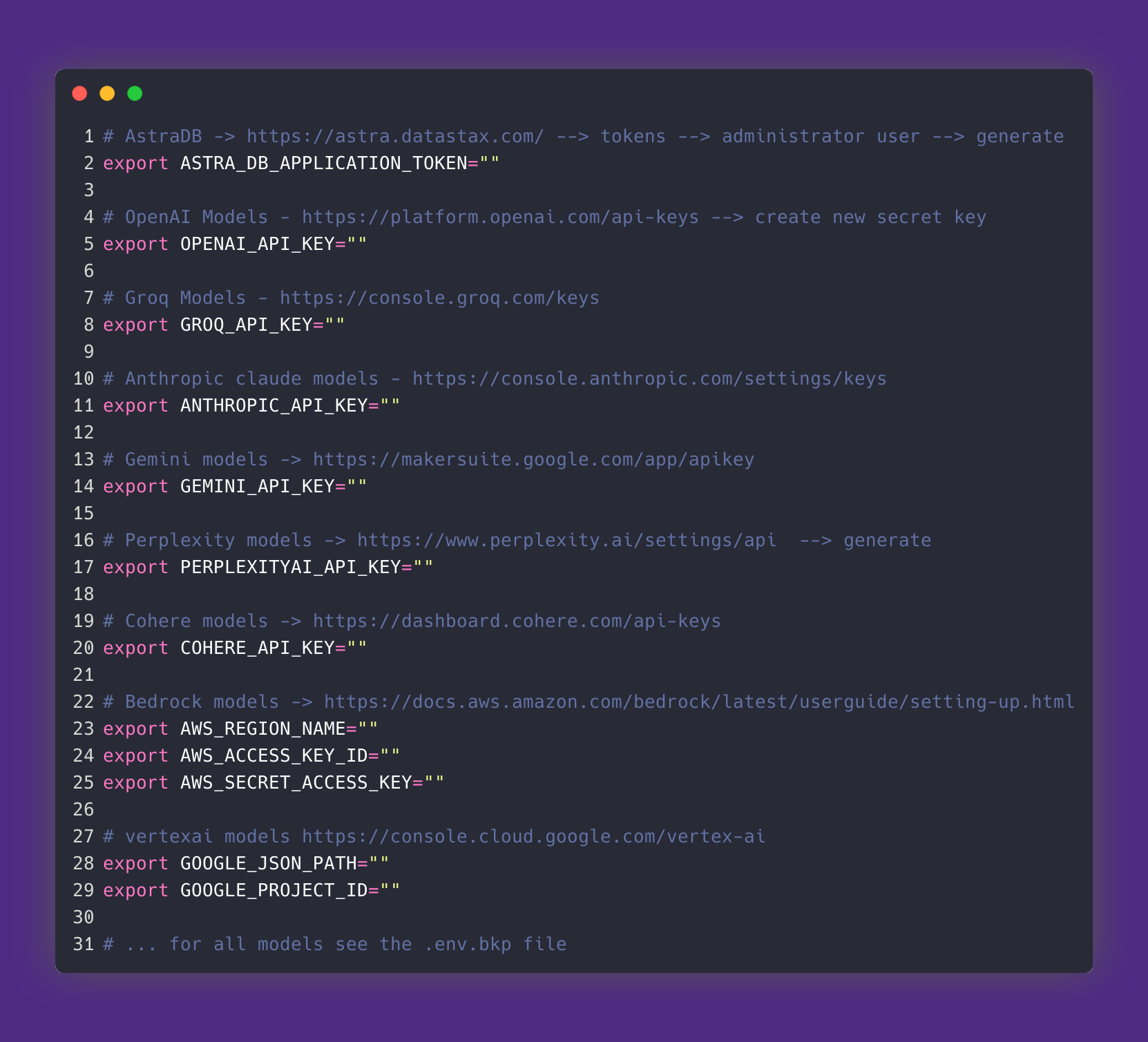
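The routing described above relies on LiteLLM's convention of prefixing a model name with its provider, as in `anthropic/claude-3-haiku-20240307`. The sketch below is a hypothetical helper, not code from astra-assistants or LiteLLM, that splits such a string into provider and model. This simplified version assumes any unprefixed name belongs to OpenAI, whereas LiteLLM itself also recognizes many unprefixed model names.

```python
def split_model(model: str, default_provider: str = "openai") -> tuple[str, str]:
    """Split a LiteLLM-style "provider/model" string into (provider, model).

    Hypothetical helper for illustration only.
    """
    provider, sep, name = model.partition("/")
    if not sep:
        # No prefix (e.g. "gpt-4o"): fall back to the assumed default provider.
        return default_provider, model
    # Split on the first "/" only, so any further slashes stay in the model name.
    return provider, name


print(split_model("anthropic/claude-3-haiku-20240307"))  # ('anthropic', 'claude-3-haiku-20240307')
print(split_model("gpt-4o"))  # ('openai', 'gpt-4o')
```

Splitting only on the first slash means a name with more than one `/` keeps everything after the first slash as the model name.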

For authentication, you must provide API keys for the corresponding model provider(s). We recommend putting them in environment variables in a .env file; when you patch the OpenAI SDK, the astra-assistants client library automatically picks them up and sends them to the astra-assistants service as HTTP request headers.
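To make the header mechanism concrete, here is a stdlib-only sketch that reads .env content and turns provider API keys into request headers. The list of variable names and the header naming scheme (`OPENAI_API_KEY` becomes `openai-api-key`) are assumptions for illustration, not the client library's actual behavior; in practice a package such as python-dotenv would load the file into `os.environ` for you.

```python
# Provider key variables this sketch looks for (an assumed, non-exhaustive list).
PROVIDER_KEY_VARS = ("OPENAI_API_KEY", "ANTHROPIC_API_KEY", "GROQ_API_KEY")


def parse_dotenv(text: str) -> dict[str, str]:
    """Minimal KEY=VALUE parser: skips blank lines and comments, strips optional quotes."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def key_headers(env: dict[str, str]) -> dict[str, str]:
    """Map each provider key that is set to a header (hypothetical naming scheme)."""
    return {
        var.lower().replace("_", "-"): env[var]
        for var in PROVIDER_KEY_VARS
        if env.get(var)
    }


sample = """
# .env
OPENAI_API_KEY=sk-test-123
ANTHROPIC_API_KEY="sk-ant-test-456"
"""
print(key_headers(parse_dotenv(sample)))
# {'openai-api-key': 'sk-test-123', 'anthropic-api-key': 'sk-ant-test-456'}
```

Only keys that are actually set become headers, so each request carries credentials just for the providers you configured.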

Architecture

Astra Assistants is a Python project built on FastAPI that implements the backend for the Assistants API using the Cassandra Python driver and LiteLLM.
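The database schema isn't described here, so as a rough mental model only, here is a hypothetical in-memory sketch of a Cassandra-style layout for messages: rows are partitioned by `thread_id` and kept sorted by a clustering column (`created_at`), so listing a thread reads a single, already-ordered partition. The class and field names are illustrative and not taken from astra-assistants.

```python
import bisect
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass(order=True)
class Message:
    created_at: int  # clustering column: orders rows within a thread
    message_id: str  # tie-breaker so rows with equal timestamps still sort
    content: str = field(compare=False)


class MessageStore:
    """In-memory stand-in for a table partitioned by thread_id (hypothetical)."""

    def __init__(self) -> None:
        # partition key (thread_id) -> messages kept sorted by (created_at, message_id)
        self._partitions: dict[str, list[Message]] = defaultdict(list)

    def insert(self, thread_id: str, msg: Message) -> None:
        # Keep each partition sorted on write, mimicking clustering order.
        bisect.insort(self._partitions[thread_id], msg)

    def list_messages(self, thread_id: str) -> list[str]:
        # Reading a thread touches exactly one partition.
        return [m.content for m in self._partitions.get(thread_id, [])]


store = MessageStore()
store.insert("thread_1", Message(2, "msg_b", "second"))
store.insert("thread_1", Message(1, "msg_a", "first"))
store.insert("thread_2", Message(1, "msg_c", "other thread"))
print(store.list_messages("thread_1"))  # ['first', 'second']
```

Partitioning by thread means the common "list the messages in this thread" query never has to scan other threads' data.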

If you run astra-assistants yourself, you can even point it at your local Ollama setup to use open-source models.
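LiteLLM addresses Ollama models with an `ollama/` prefix and accepts an `api_base` argument for the server URL, and Ollama listens on `http://localhost:11434` by default. The hypothetical helper below only builds keyword arguments for `litellm.completion()`, so it runs without LiteLLM or Ollama installed; the model name `llama3` is an example, and how astra-assistants itself configures Ollama may differ.

```python
OLLAMA_BASE_URL = "http://localhost:11434"  # Ollama's default local address


def completion_kwargs(model: str, prompt: str) -> dict:
    """Build keyword arguments in the shape litellm.completion() accepts.

    For "ollama/..." models, point api_base at the local Ollama server;
    hosted providers use their default endpoints, so api_base is left unset.
    """
    kwargs = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    if model.startswith("ollama/"):
        kwargs["api_base"] = OLLAMA_BASE_URL
    return kwargs


print(completion_kwargs("ollama/llama3", "Hello!"))
# {'model': 'ollama/llama3', 'messages': [{'role': 'user', 'content': 'Hello!'}], 'api_base': 'http://localhost:11434'}
```

With LiteLLM installed and Ollama running, these arguments could be passed as `litellm.completion(**kwargs)`.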

Releases and improvements

We launched the service on November 15, 2023.

We added streaming support in February 2024, before OpenAI did.

We open-sourced the server-side code in March 2024.

And in June 2024, we added support for Assistants API v2, including vector_stores.

Conclusion

It's been a ton of fun working on Astra Assistants, and I'll continue to post updates here, so stay tuned!

If you like the project, please give us a GitHub star!